How I Learned to Stop Worrying and Love the Tests

Adopting a culture of testing on mobile is hard. If you already work with teams that are used to riding the highs of banging out awesome features for an app while writing few to no tests, asking them to start writing tests outright might be the equivalent of a record scratch. The thought of slowing down to learn how to write tests, and of changing the way we write code to support testing, is scary at first. After all, we want to keep delivering value to our customers quickly and make them happy, and adding tests might initially feel like it gets in the way of that!

Over the years, I’ve heard a lot of opinions for and against investing in automated tests:

“I don’t have time to write tests. I need to be able to ship code quickly.”

“Tests let me ship code more quickly and confidently.”

“Automated testing cannot test all edge cases. Testing manually is better to really verify things.”

“With automated testing, I save time by not having to manually test everything.”

These are all valid opinions! However, as you might guess from the title of this article, I’ve grown to love writing automated tests! In my experience, adopting a culture of testing takes patience, support, a desire to learn, and the will to challenge and be challenged. When introducing automated testing to engineers, I usually share my own initial experience.

Getting Thrown into the Fire of Automated Testing

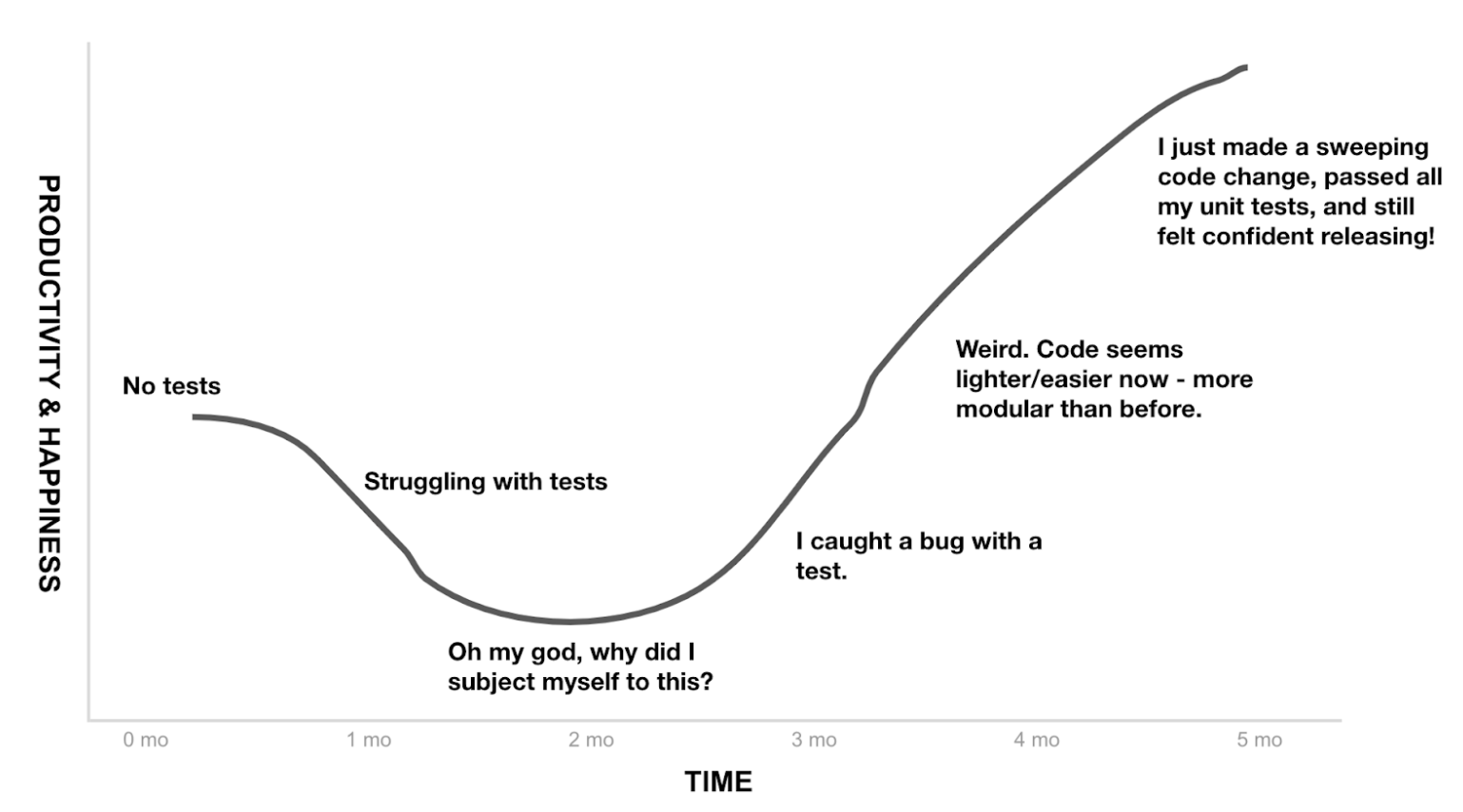

Mobile apps have complex state, view lifecycle, callbacks, and other things that make testing hard. The web has been around a lot longer than mobile and enjoys the benefits of long-established tooling and practices for testing. Because the world of mobile testing is relatively new, it takes time and patience to determine which best practices will work for your team. A former boss and mentor taught me how to approach test-driven development for iOS. As a mobile developer who didn’t have much prior knowledge or experience with writing tests, learning how and why to change the way I wrote my code to support testing was quite the mind-bender. My arc basically looked something like this:

One day, after some time refactoring my brain and code to be able to support tests, a lightbulb went on and I realized that this testing thing is worth it. I found that:

I could more clearly read my own code.

I could more easily and quickly convey what my code did to others.

I spent less time frustrated over how to structure and organize my code as the codebase grew.

I spent less time chasing down bugs caused by my code.

I went from hating testing to thoroughly enjoying it and the improved quality of my work. Ever since then, I’ve enjoyed sharing my experience with other teams and offering suggested practices and tooling to help guide them through the tricky changes I once went through myself.

Adopting Tests for the Squarespace iOS App

When I arrived at Squarespace in 2019, the Squarespace iOS App had next to no automated tests. We decided to adopt more automated testing primarily because we wanted to reduce our reliance on manual QA. Adopting a testing culture fundamentally changed the way we worked on the Squarespace iOS App between 2019 and 2020:

In 2019:

We didn’t write automated tests.

Our code wasn’t very modular/testable.

We relied primarily on the QA team to manually validate our work.

By the end of 2020:

We wrote automated tests.

Our code became more modular/testable.

We relied primarily on engineers to validate their work.

The solution to becoming a test-driven team is part technical, part relationship-building. We took these steps to get there, and while some of them are iOS-specific, similar practices can still be beneficial when applied to other platforms:

Meeting and Coaching 1-on-1

Initially, I met 1-on-1 with every engineer on the mobile teams to get a picture of what the common feelings and pain points were with automated testing. I would generally do a pair programming session with them for 30 to 60 minutes to briefly learn about their current approach towards writing code, and start to determine entry points to help with writing more tests. The biggest thing here was to not be pushy about the tests.

When a pull request appeared without tests, I tried to find places to comment and offer suggestions on how a test might be important to help continually validate specific behavior. Often this resulted in the author writing a test to support it, and building the muscle memory of considering tests with new or refactored work. When writing a test proved to be tricky, pairing helped provide both technical and moral support to solve the problem.

Introducing New Tools and Techniques

To write tests with ease, the right tools and techniques need to be in place. We don’t get a lot of convenient out-of-the-box tools for testing with iOS, so we adopted some handy third-party libraries and built our own set of standards to help with building our testing muscle.

Quick and Nimble, a behavior-driven testing framework and a matcher framework, respectively, enabled us to express our tests as plain-English, human-readable documentation. This made it easier for developers to reason about how our implementation code worked. Where mocking and stubbing dependencies was necessary, we built our own protocol-driven interfaces and leveraged the service locator pattern with Resolver to make dependency injection painless in our tests.
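As a rough illustration of the protocol-driven approach described above, the sketch below stubs a dependency behind a protocol and registers it in a tiny hand-rolled service locator. Everything here is hypothetical: `SiteFetching`, `StubSiteFetcher`, `ServiceLocator`, and `SiteListViewModel` are names made up for this example, and the locator is only a stand-in for a library like Resolver, not Resolver's actual API. The check uses a plain `assert` rather than Quick and Nimble so the snippet stays self-contained.

```swift
import Foundation

// Hypothetical protocol describing the dependency the view model needs.
protocol SiteFetching {
    func fetchSiteTitles() -> [String]
}

// Stub used in tests: returns canned data instead of hitting the network.
struct StubSiteFetcher: SiteFetching {
    let titles: [String]
    func fetchSiteTitles() -> [String] { return titles }
}

// Minimal hand-rolled service locator (a stand-in, not Resolver's API).
final class ServiceLocator {
    private var factories: [ObjectIdentifier: () -> Any] = [:]

    // Register a factory closure for a given service type.
    func register<Service>(_ type: Service.Type, factory: @escaping () -> Service) {
        factories[ObjectIdentifier(type)] = { factory() }
    }

    // Look up a service; returns nil when nothing was registered for the type.
    func resolve<Service>(_ type: Service.Type) -> Service? {
        guard let factory = factories[ObjectIdentifier(type)] else { return nil }
        return factory() as? Service
    }
}

// Hypothetical view model that receives its dependency instead of creating it.
struct SiteListViewModel {
    let fetcher: SiteFetching

    var headline: String {
        let count = fetcher.fetchSiteTitles().count
        return count == 1 ? "1 site" : "\(count) sites"
    }
}

// In a test, register the stub, then build the view model from the locator.
let locator = ServiceLocator()
locator.register(SiteFetching.self) { StubSiteFetcher(titles: ["Portfolio", "Shop"]) }

guard let fetcher = locator.resolve(SiteFetching.self) else {
    fatalError("SiteFetching was not registered")
}
let viewModel = SiteListViewModel(fetcher: fetcher)
assert(viewModel.headline == "2 sites")
```

Because the view model only depends on the protocol, production code could register a network-backed implementation while tests register the stub, with no changes to the view model itself.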

Tracking Code Coverage

Tracking our code coverage with SonarQube encouraged more tests in pull requests. SonarQube flagged uncovered lines of code, which prompted discussion on pull requests about what we collectively felt was important to test. And while code coverage is sometimes considered a vanity metric, seeing it rise also encouraged engineers to continue to strive for quality.

Listening, Discussing, Trying, Failing, and Learning Together

Naturally, opinions and experience varied. We didn’t all agree at first on how and where to apply tests. It took an openness to share, discuss, and try different things (which we still do to this day) to become a team committed to writing tests. After initially trying and failing to write certain kinds of tests, we collaborated to determine what would enable us to write our tests and moved forward. As we learned what worked for us, we documented it, both to onboard new engineers to our new testing practices and to help us remember it ourselves.

The Impact of Adopting the Tests

We primarily focused on writing a combination of unit, integration, and snapshot tests as we developed new features and made changes to existing ones. As a result of these incremental improvements:

We filed 75% fewer manual QA test requests. Engineers took more ownership of the quality of code. By writing unit and integration tests, we were able to ship confidently and manually verify most things ourselves.

We made about 67% fewer hotfix releases. By writing tests for our code, we were able to confidently ship every two weeks through our release train.

In 2019, we had 9 hotfix releases.

In 2020, we had 3 hotfix releases.

In the end, our code coverage increased from 13.9% to 54.7%. While not a primary measure of success in automated testing, the significant jump showed we had made the commitment to investing a whole lot more in our test suite.

There were also some less measurable but important things that improved developer efficiency:

Our code became more modular. Writing unit tests guided us towards creating smaller, more focused interfaces, which improved our overall architecture. For example, we added onto our use of Model-View-ViewModel by creating new discrete routing interfaces for each screen, which separated routing logic from our view models, making our code easier to test and understand.

We just plain felt more confident in our code. Authors and reviewers in pull requests could more easily reason about why code would work after merge.

We adopted new and improved techniques.

Mocking and stubbing dependencies

Better dependency injection via the service locator pattern

A router pattern to organize setting up and navigating screens

Individual app configuration initializers

Our most complex component, the page editor, adopted more modular view and JavaScript bridging configurations

Test specs became documentation. This helped engineers more easily and quickly understand how production code worked.
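To make the routing separation mentioned above more concrete, here is a minimal sketch of a view model that delegates navigation to a router protocol, with a spy standing in for the real router under test. The names (`SettingsRouting`, `SettingsViewModel`, `SpySettingsRouter`, and the `Destination` cases) are invented for illustration and are not taken from the Squarespace codebase. A plain `assert` stands in for a Quick and Nimble expectation, and the comment above the test reads the way a spec description might.

```swift
// Hypothetical destinations this screen can navigate to.
enum Destination: Equatable {
    case billing
    case help(articleID: Int)
}

// Routing interface for one screen; the view model only knows this protocol.
protocol SettingsRouting: AnyObject {
    func route(to destination: Destination)
}

// The view model holds presentation logic and delegates navigation to the router.
final class SettingsViewModel {
    private let router: SettingsRouting
    private let hasActiveSubscription: Bool

    init(router: SettingsRouting, hasActiveSubscription: Bool) {
        self.router = router
        self.hasActiveSubscription = hasActiveSubscription
    }

    func didTapManagePlan() {
        if hasActiveSubscription {
            router.route(to: .billing)
        } else {
            // The article ID is an arbitrary placeholder value.
            router.route(to: .help(articleID: 42))
        }
    }
}

// Spy that records routing calls so a test can assert on them.
final class SpySettingsRouter: SettingsRouting {
    private(set) var destinations: [Destination] = []

    func route(to destination: Destination) {
        destinations.append(destination)
    }
}

// "When the user has an active subscription, tapping Manage Plan routes to billing."
let spy = SpySettingsRouter()
let viewModel = SettingsViewModel(router: spy, hasActiveSubscription: true)
viewModel.didTapManagePlan()
assert(spy.destinations == [.billing])
```

Keeping navigation behind a protocol means the test never has to build or present a real screen; it only checks which destination the view model asked for.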

The Long, Patient Journey

When I share any presentation with teams on adopting various practices in testing, I often include a slide with the following copy:

This has been my experience every time, and I try to remind both myself and teams (hopefully without being too preachy) that good work in testing takes time, both in implementation and in seeing the results that make it worth it!

Acknowledgements

Every single person on all the mobile teams across all platforms contributed to our adoption of tests on the Squarespace iOS App. Their thoughts, feedback, and experience were invaluable in supporting these changes and making them happen.