How We Reimagined A/B Testing at Squarespace

Introduction

Developing great products isn’t just a matter of engineering; it’s also about developing an understanding of your users and their needs. At Squarespace, we’re always striving to create the best products possible, which requires us to constantly learn more about our users. A/B testing is one of the most powerful tools in this regard, and over the past year, we’ve been turning it into a simple, repeatable process that scales with our product aspirations.

The key changes we made were:

Unified Testing Infrastructure: Giving the team an easy way to create new tests and providing self-service BI tools to product analysts.

Fostering a Culture of Experimentation: Promoting A/B testing as a way of thinking and empowering Product to incorporate testing into faster, more iterative development cycles.

In this blog post, we’ll dive into the tooling and culture improvements we made and how, as a result, A/B testing at Squarespace became faster, more accurate, and more impactful.

Unified A/B Testing Infrastructure

Our goal for tooling was simple: go from ad-hoc, disparate processes to systematic, repeatable ones. As engineering teams started running A/B tests, they each implemented their own solutions, leading to a proliferation of assignment tools and data pipelines. Since this meant the data lived in different places, reporting was inconsistent and analysis was bespoke. Helping the organization migrate onto a single pipeline was table stakes before everything else. Teams from across the Engineering organization came together to collaborate on the tools that would be necessary to build a test-driven culture.

After over a year of work, three main pieces of technology came together to form the backbone of our unified experimental framework:

Praetor, our in-house experiment assignment framework

Amplitude, our product analytics tool

Horseradish, our in-house statistics and data visualization library

Praetor (Experimental Assignments)

A/B tests in different parts of the product have different requirements. This resulted in a variety of bespoke tools, each serving narrow use cases. For example, one framework was for anonymous visitors (e.g., on our homepage or help center) and another was for logged-in users (e.g., in trial). To unify testing processes and reduce maintenance burden, we created Praetor, a service that abstracts away these different test “subjects” and allows product engineers to easily implement A/B test assignments, modify test settings without code deploys, and monitor any currently running tests.

Amplitude (Product Analytics)

To truly democratize data, stakeholders need to be able to access product usage data easily, without requiring deep institutional knowledge or advanced technical skills. Our third-party product analytics platform, Amplitude, provides real-time access to product usage data for product managers, designers, engineers, and anyone else in the organization. Amplitude is an essential part of the product development cycle, empowering the entire organization to form their own hypotheses, monitor feature usage, and track KPIs.

Horseradish (Statistics)

To standardize A/B test evaluation, Data Science created an opinionated in-house library (Horseradish) for common data transformations, visualizations, and statistical methods. No more debates on Frequentist vs. Bayesian, p-values, or how to incorporate priors - Horseradish abstracts away the complicated mathematics and allows tests to be self-service, even for particularly elaborate ones.
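As an illustration of the kind of calculation a statistics library can hide behind a single call, here is a minimal sketch of a two-sided, two-proportion z-test on conversion counts. This post does not say which methods Horseradish uses, so the frequentist test below is a stand-in chosen for brevity, and the `two_proportion_z_test` function and `ZTestResult` type are hypothetical names, not Horseradish's API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ZTestResult:
    control_rate: float
    treatment_rate: float
    absolute_lift: float  # treatment_rate - control_rate
    z: float
    p_value: float        # two-sided


def two_proportion_z_test(
    control_conversions: int,
    control_n: int,
    treatment_conversions: int,
    treatment_n: int,
) -> ZTestResult:
    """Compare two conversion rates with a pooled two-proportion z-test."""
    if control_n <= 0 or treatment_n <= 0:
        raise ValueError("both groups need at least one subject")

    p_c = control_conversions / control_n
    p_t = treatment_conversions / treatment_n

    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (control_conversions + treatment_conversions) / (control_n + treatment_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control_n + 1 / treatment_n))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")

    z = (p_t - p_c) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return ZTestResult(p_c, p_t, p_t - p_c, z, p_value)


if __name__ == "__main__":
    result = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"lift={result.absolute_lift:.3f} z={result.z:.2f} p={result.p_value:.4f}")
```

Wrapping the test in one function with a fixed result type is what makes evaluation self-service: an analyst supplies counts and reads off the lift and p-value without choosing a method each time.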

A Culture of Experimentation

A successful A/B testing program isn’t defined by its tooling or statistical techniques; rather, what makes it successful is having a culture where A/B testing is an intrinsic and valued part of the development cycle. At Squarespace, we have three main goals:

Promote a culture of iterative, hypothesis-driven development

Provide self-service tooling and training to enable decentralized execution of A/B tests

Make sure everyone is committed to generating common artifacts and documentation

Iterative, Hypothesis-Driven Development

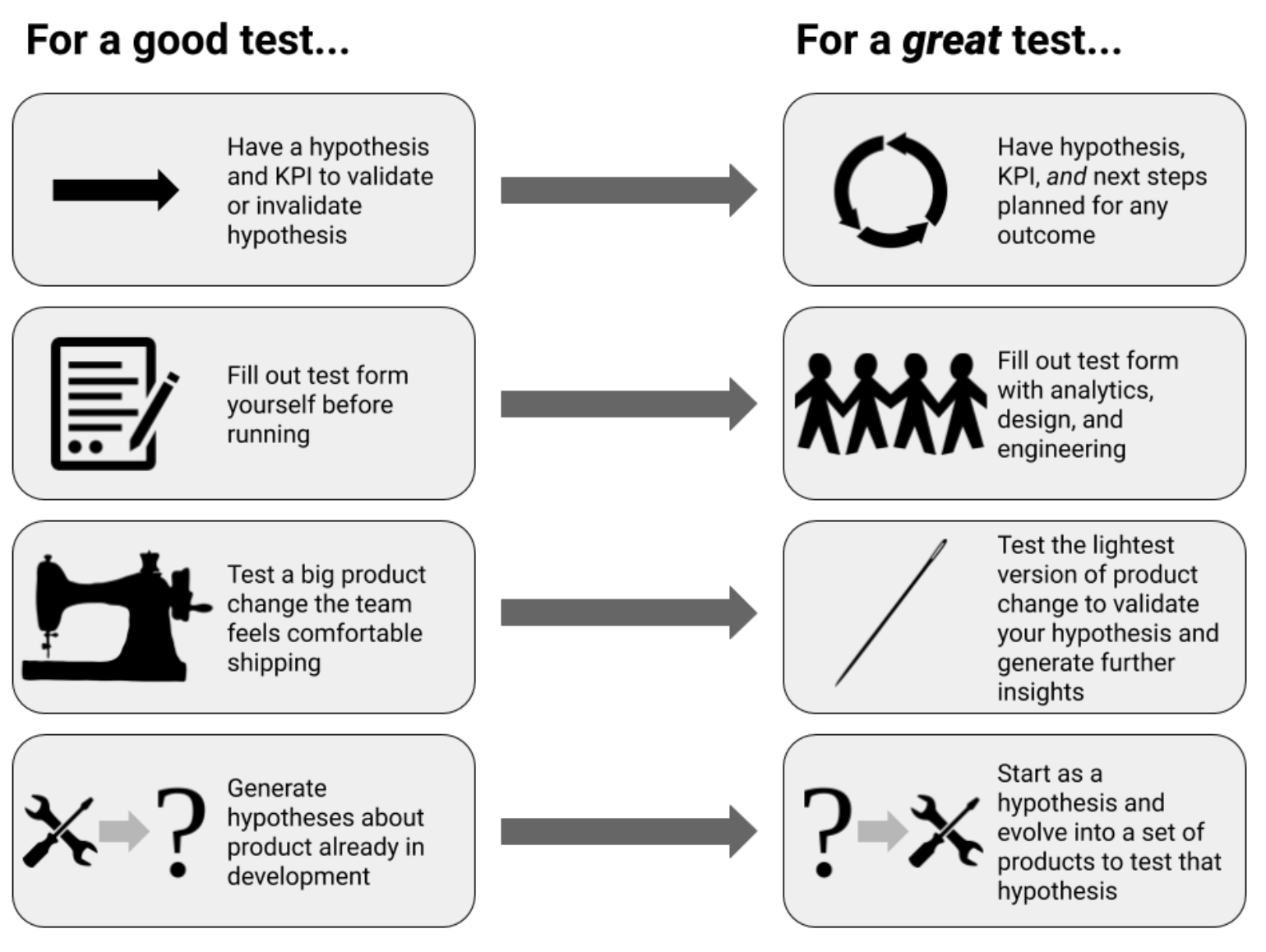

Our vision is to promote a culture of iterative, hypothesis-driven development. Instead of building features based on what we think users need, we validate these assumptions via A/B testing and use these learnings to continually refine how we think about our users. Each new hypothesis should build on the conclusions of prior tests. For example, here is a fictional series of tests where we learn about how to best educate users on how to use the product:

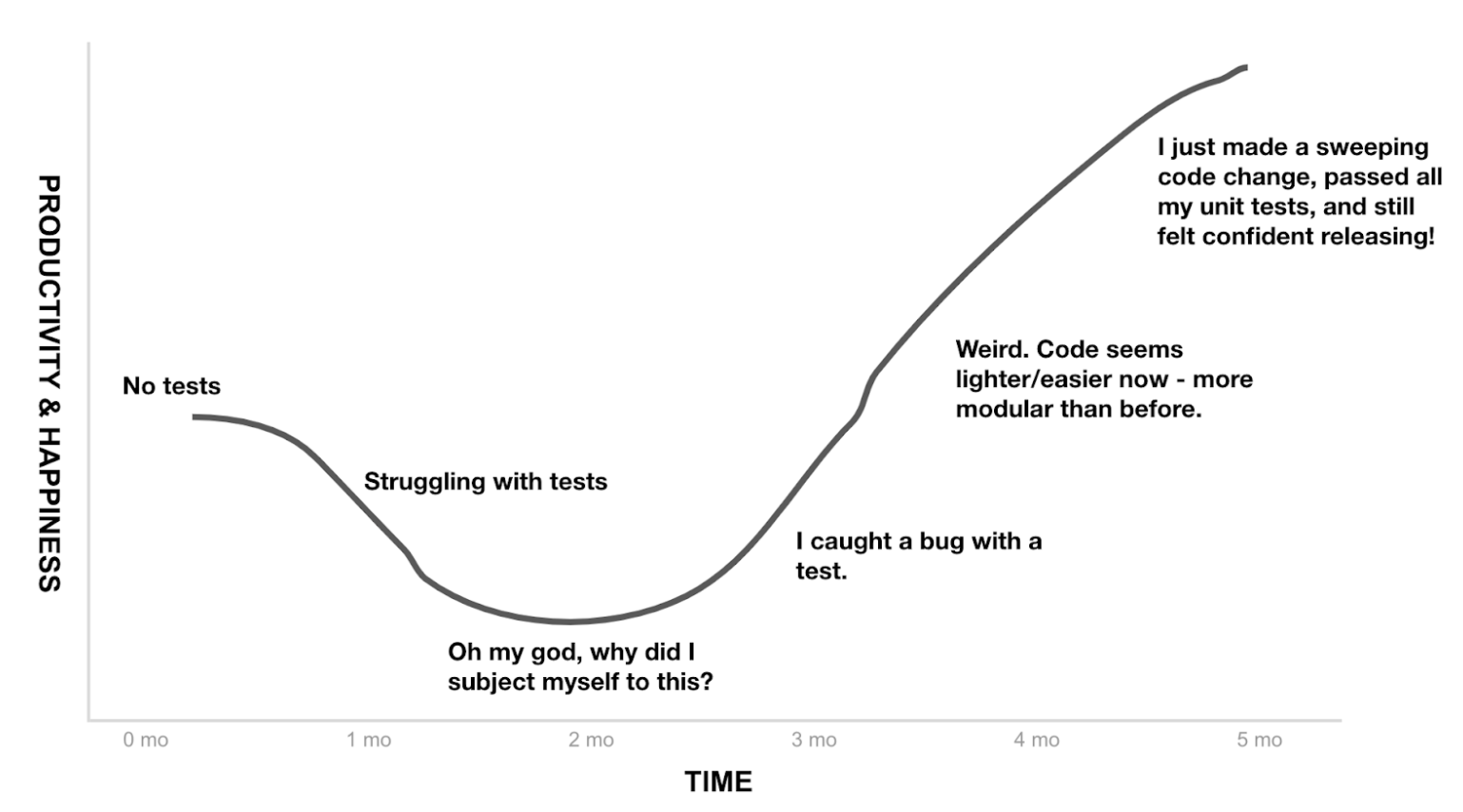

Transitioning to this style of development is hard - it requires teams to totally re-frame how they think about problems. There are a few things that we found particularly important when upgrading our A/B testing program:

Decentralized Execution of A/B Tests

To enable an iterative, agile testing process, we opted to decentralize the execution of A/B tests. We follow a roughly hybrid model, with Data Science and Data Engineering providing tools, training, best practices, and occasional hands-on support, but with the bulk of the actual execution in the hands of analysts, PMs, and product engineers. Data Science still consults on test design (e.g. deciding how to measure success), but the test execution (e.g. implementing tracking or calculating results) can be completely hands-off.

We attribute our success here to a few factors:

Widespread Data Fluency: Data fluency is a culmination of different practices — quarterly OKRs, well-understood company KPIs, access to reliable data, regular exposure to insights and findings, etc. These provide the foundation for teams to run tests independently.

Evangelizing Experimentation: With strong points of view on how experiments should be run, the Data team conducted regular training sessions and provided guidance to product teams across the organization.

Mature Self-Service Tools: The tools described in the previous section (Amplitude, Praetor, Horseradish) were fully adopted by most product teams.

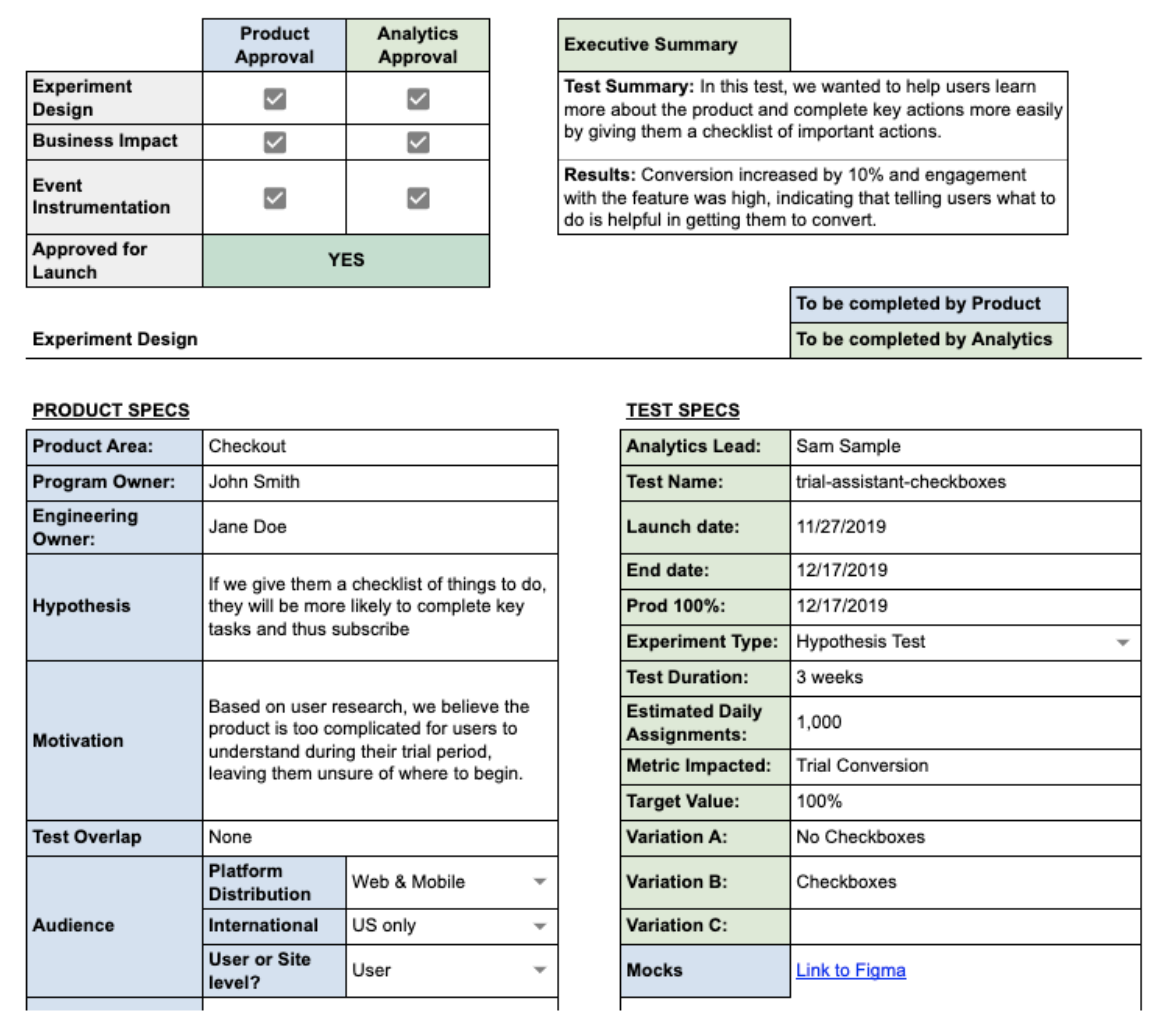

Common Artifacts & Documentation

Consistent training and documentation are critical to the success of this hybrid model.

The A/B test form is the key artifact in our A/B testing process - it is required to launch a product A/B test. Part checklist, part project plan, and part presentation, it walks users through the testing process, from ideation to analyzing results, giving users prompts and checklists to help create and execute a useful test.

The A/B test log offers a complete history of all A/B tests run at Squarespace. This allows for greater transparency, helps avoid conflicts, and enables teams to more easily learn from past tests.

Impact

All this work has led to improvements across multiple areas:

Higher Volume: We’ve gone from specific teams running a handful of tests a quarter to product teams running tests constantly

Faster Turnaround: The time to go from idea to test to conclusion has been cut from months to weeks (or even days in some cases)

Consistent Design: Tests are well-designed, statistically robust, and in a position to succeed

Smarter Decisions: With tests being easier and faster to run, product teams are making more data-driven decisions and understanding more about our users

We believe that improving our tooling and building a culture of experimentation across Squarespace will always be a work in progress. So where do we go from here?

Partnering with product and research teams (e.g. Data Science, Analytics, UX Research) to build robust hypothesis backlogs

Launching new and different types of tests (e.g. multi-armed bandits)

Developing new strategies to evaluate our entire A/B testing program (e.g. universal holdouts)

We hope to share progress in these areas in a future blog post.

Acknowledgements

Multiple teams across Squarespace are involved in A/B testing. Major contributors include Product, Data Science, Product Analytics, Core Services, and Events Pipeline and Amplitude (EPA).